Uncovering the primitives of perception so that machines can understand us better.

The Model, in a Nutshell

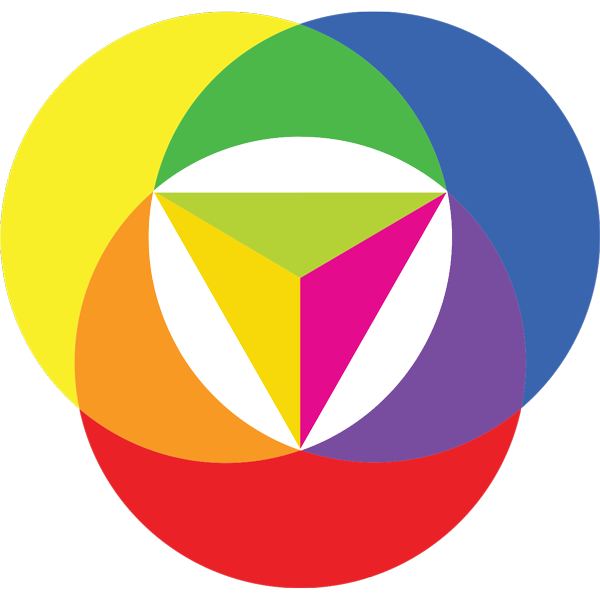

Semantics – Codifying Opposition

The human mind is equipped with multiple redundancies, though not all are of the same quality.
